Microsoft, Facebook take the plunge with novel cloud cooling approaches

The new technologies could allow data centers to be installed in far more remote locations than ever before.

Almost four years after it began testing the idea of underwater data centers, Microsoft is sinking even more resources into the experiment.

This time, it has submerged 864 computer servers (and all the related gear they need to run) about 117 feet deep on the seafloor off the coast of Scotland. They’re crammed into 12 racks and housed in a 40-foot-long container that looks more like a submarine than a traditional data hall. The entire prototype runs off about 0.25 megawatts of renewable solar and wind electricity fed into it through a cable from the shore.

The high-level aim of Project Natick is to test how reliably cool ocean water can keep the technology running when faced with rigorous workloads, such as running artificial intelligence computations or handling video streaming services.

The experiment will run for one year, collecting metrics such as power consumption, internal humidity and temperature, before the data center is lifted back out of the water. In theory, the data center shouldn’t require maintenance for up to five years, for those of you who are wondering about rebooting computers that “live” at depths that are just within the deep-dive limits for recreational scuba divers.

But is it seaworthy?

The submarine analogy isn’t accidental: the underwater vessels used for exploration or military applications are information-hungry structures that need to balance electrical and thermal efficiency very carefully. So, Microsoft hired a 400-year-old engineering company from France with submarine expertise to help design its container.

“At the first look, we thought there is a big gap between data centers and submarines, but in fact they have a lot of synergies,” said Eric Papin, senior vice president, chief technical officer and director of innovation for Naval Group, in a press release.

For example, the heat exchanger for the data center uses the same principles: Cold water is piped into the back of the racks and then forced back out to reduce the temperature.

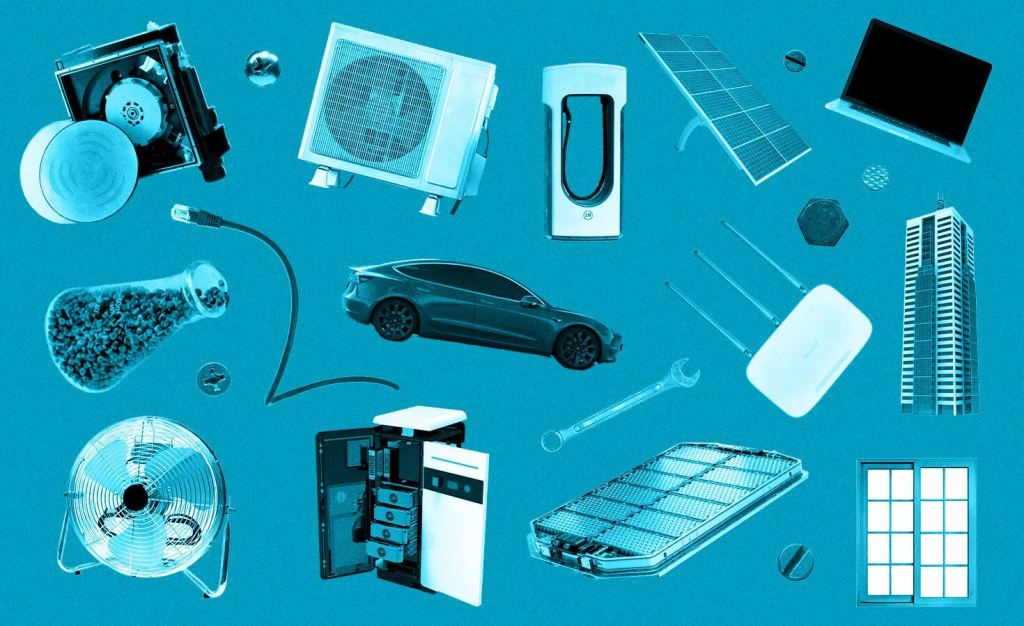

The power needs of traditional data centers are, in large part, dictated by how much electricity is needed to keep the computing gear from overheating. This is usually achieved through a combination of design, such as raised floors and special ventilation approaches, and equipment such as fans and cooling towers. The ratio of a facility’s total power draw (servers plus cooling and other overhead) to the power needed to run the servers alone is called power usage effectiveness (PUE). The closer this is to a score of 1, the better.
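For readers who want to see the arithmetic, here is a minimal sketch of the PUE calculation, using the standard definition of total facility power divided by the power drawn by the IT equipment. The function name and the facility figures are hypothetical, chosen only for illustration; they are not measurements from Microsoft or Facebook.

```python
def pue(it_power_kw: float, overhead_power_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power.

    it_power_kw       -- power drawn by servers, storage and network gear
    overhead_power_kw -- everything else (cooling, fans, power conversion, lighting)
    """
    if it_power_kw <= 0:
        raise ValueError("IT power must be positive")
    if overhead_power_kw < 0:
        raise ValueError("overhead power cannot be negative")
    return (it_power_kw + overhead_power_kw) / it_power_kw


# Hypothetical facility: 1,000 kW of servers plus 500 kW of cooling and other overhead.
print(pue(1000, 500))  # 1.5

# Same server load, but with overhead cut to 100 kW.
print(pue(1000, 100))  # 1.1
```

A score of exactly 1 would mean every watt entering the building reaches the computing gear, which is why lower is better.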

The first Project Natick design was tested off California, in far calmer, shallower waters, for 105 days. Eventually, the idea is to test whether the concept will work farther out at sea, using energy generated by offshore wind turbines or, potentially, wave or tidal energy generators. There’s another potential bonus: The closer data centers are to the population they serve, the faster they usually serve up information. If they can be “energy self-sufficient,” all the better.

The risk associated with this latest location, part of the European Marine Energy Centre, is real. The tidal currents can move water at up to nine miles an hour at their peak, and 10-foot waves are common. It took a barge outfitted with a crane and 10 winches to lower the data center to the sea floor.

Facebook floats its own breakthrough in liquid cooling

While it doesn’t have the wow factor of the Microsoft project, Facebook is also talking up another first-of-its-kind system for keeping massive data centers from overheating.

In most of its locations, the social media company uses a combination of outdoor air venting and direct evaporative cooling methods to pull this off. The exact combination depends on where the data center is physically located — this approach doesn’t work everywhere, and in places where the climate is very humid or dusty, Facebook uses an indirect cooling system.

Building on the latter work, Facebook teamed up with heating, ventilation and air conditioning manufacturer Nortek Air Solutions to develop a new indirect cooling system, called StatePoint Liquid Cooling (SPLC), that uses water instead of air to cool down data halls.

The main difference between this technology and its cousin is that it produces cold water instead of cold air, which is carefully filtered so that it can be reused with minimal maintenance. The unit can be used in combination with systems such as fan-coil walls, air handlers, in-row coolers, rear-door heat exchangers and chip cooling.

One big advantage of the system, according to Facebook, is that it will allow the company to build data centers in hot, humid climates where it’s difficult to do so efficiently today. The team notes:

“The system will not only protect our servers and buildings from environmental factors, but it will also eliminate the need for mechanical cooling in a wider range of climate conditions and provide additional flexibility for data center design, requiring less square footage in order to cool effectively. While direct evaporative cooling continues to be the most efficient method to cool our data centers where environmental conditions allow, SPLC provides a more efficient indirect cooling system for a wider range of climates.”

Intuitively, you might expect that this sort of approach would increase water consumption. But Facebook reports that based on its testing, the new technology could reduce water usage by about 20 percent in hot and humid climates.

By the way, Facebook calculates that the PUE across its data center portfolio is 1.10. It believes that its new system will operate “on par or better” than its direct cooling approaches — even in hot and humid climates.
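To put the 1.10 figure in perspective, the short sketch below converts a PUE score into the share of a facility’s total energy that goes to cooling and other overhead rather than to the servers. It assumes the standard definition of PUE (total facility energy divided by IT energy); the function name is hypothetical.

```python
def overhead_share(pue: float) -> float:
    """Fraction of total facility energy that does not reach the IT equipment."""
    if pue < 1:
        raise ValueError("PUE cannot be below 1")
    # Total = PUE * IT, so overhead / total = (PUE - 1) / PUE.
    return (pue - 1) / pue


# Facebook's reported portfolio-wide PUE.
print(f"{overhead_share(1.10):.1%}")  # 9.1%
```

In other words, at a PUE of 1.10, roughly one-eleventh of the electricity entering the building is spent on something other than computing.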