A sea change for seafloor mapping

One of the biggest challenges in drawing ocean maps at any depth has been the relative lack of standardization in how data is shared.

The Bedrock team (Chiau and DiMare are in the background) launches a Bedrock AUV.

This article was adapted from the Climate Tech Weekly newsletter, delivered Wednesdays.

I’ve been fascinated with the ocean since I became an avid scuba diver two decades ago, a love I share with geographer and oceanographer Dawn Wright, chief scientist of geographic information systems software company Esri.

Something she shared with me during one of our first chats continues to tease my brain: We “know” more about the planets Venus and Mars, as well as the far side of the moon, than we do about the ocean depths here on Earth. Astonishingly, we have mapped just 20 percent of the ocean floor (most of that area in the very recent past).

In a column published by Bloomberg and the Washington Post last month, Wright urges more government and private-sector funding to fill that gap. Why does this matter? Consider that the ocean absorbs about 24 percent of the CO2 released by human activities; for perspective, forests and other vegetation handle an estimated 30 percent. Human activities, including trawl fishing and the deep-sea mining that some companies are beginning to contemplate, weaken the ocean’s sequestration potential. “To measure the progress of climate change and to study the ocean processes and human activities that affect that process, it is essential to assemble a detailed picture of the undersea world,” Wright writes.

The Seabed 2030 initiative, supported by the Nippon Foundation, is largely responsible for creating the maps we do have today. It’s using sensors carried on transoceanic ships, along with robots and other data-gathering technologies, to do the job.

One of the biggest challenges in drawing these maps at any depth has been the relative lack of standardization. Sensor company Sofar Ocean Technologies, along with the Defense Advanced Research Projects Agency (DARPA), the Office of Naval Research and research organization Oceankind, is proposing to fill that gap with an open hardware standard called Bristlemouth (named after the weird-looking fish).

Sofar’s free-floating buoys gather information about water and climate conditions (temperature, currents, waves, shifting weather and so forth), which the maritime shipping industry uses to plan more fuel-efficient routes. Sofar co-founder and CEO Tim Janssen likens the information to Google Maps or Waze, but for seaways instead of highways.

One of Sofar’s solar-powered marine buoys.

Sofar sells its data-collection buoys along with subscriptions to its data services. It hopes the new hardware connector standard will reduce integration costs and enable more organizations to share the information they’re collecting. “Fundamentally, we are trying to remove friction around scaling ocean applications,” Janssen told me.

Jason Thompson, CTO of Oceankind, which is investing in the standardization effort, noted: “We funded Bristlemouth to enable marine innovators to generate real-time subsurface insights that provide a greater understanding of our ocean environments to help advance science and technology needed to reverse growing climate threats.”

Diving deep

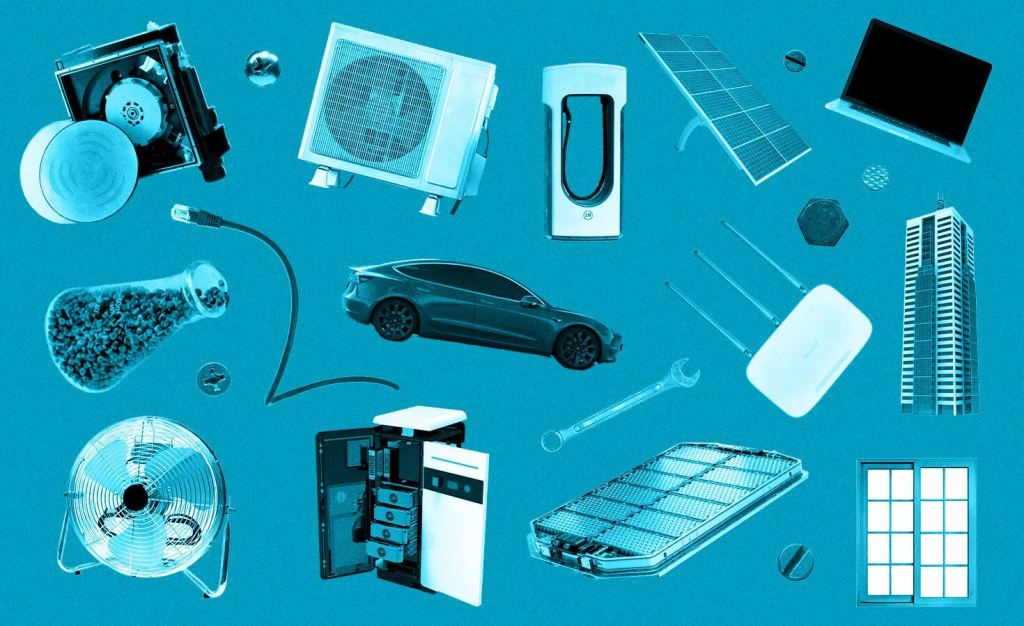

Another startup hoping to make waves in ocean mapping is Bedrock Ocean Exploration, co-founded by nautical data entrepreneur Anthony DiMare (Nautilus Labs) and Charles Chiau (SpaceX, Reliable Robotics, SRI International). The venture, which raised $8 million in seed funding in March, has developed a 7-foot-long, 100-pound “autonomous underwater vehicle” that scans the seafloor at depths of up to 0.63 mile (about 1,000 meters) and reports back on what’s there: manmade objects (even unexploded ordnance), rocks, vegetation and anomalies in the topography. Its sonar is designed with the health of marine mammals in mind.

Bedrock’s commercial aspiration is to provide a faster data-gathering alternative for organizations that need ocean-floor surveys for projects such as offshore wind farms and undersea telecommunications and utility cables. That information is needed to help justify construction in specific places, for insurance and permitting, and to watch for changes that could affect the integrity of a project. “Anyone that builds infrastructure in or on top of the ocean generally wants to know what is going on on the seafloor below,” DiMare told me.

The big challenge in gathering that data is the lead time, which can easily run six to 12 months. Bedrock is striving to cut that to a month by building a vehicle that is easy to ship to survey sites (it fits in two standard Pelican cases) and by eliminating some of the links in the data collection and processing chain that create bottlenecks.

The process costs about the same as traditional surveying: a typical project that includes four data sets might run $1 million to $10 million, depending on the area being surveyed, DiMare said. The data is shared through Bedrock’s cloud service, Mosaic.

Bedrock’s biggest challenge right now is keeping up with customer demand: There’s a wait list for its tiny submarine. The company is just beginning to build its fleet and will need many more millions of dollars to scale it. “If we’re going to map the shallow ocean, we can’t just use ships,” DiMare said.

Two other companies to keep on your sonar: Terradepth, which is prototyping a 30-foot drone that can reach depths of 20,000 feet; and planblue, which is developing “underwater satellites” for seafloor mapping.